The cost of scientific publishing: update and call for action

Tom Olijhoek - May 16, 2014 in Call to Action

Opening knowledge is great. Sharing knowledge is vital. In the past, publishers were the sole mediators for the dissemination of knowledge in printed form. In our digital age, sharing has become easier thanks to the internet. Yet, although all areas of society have embraced the internet as THE sharing medium, scientific publishing has lagged far behind. Nowhere are the advantages of sharing knowledge as obvious as in science, but instead of facilitating sharing, scientific publishers desperately try to protect their grip on access to knowledge. Open access publication systems are a real threat to their lucrative business. That is why finding arguments against open access has become of vital importance to them. Similarly, countering these arguments is of vital importance for all of us who need [open] access.

Where are we now…

Tim Gowers’ recently published blog post on his quest for information on subscription prices for Elsevier journals, using direct approaches (calling and writing to publishers and libraries) and indirect approaches (demanding information under Freedom of Information (FOI) legislation), has caused a major stir. I recommend reading Michelle Brook’s very good overview in her recent blog. Some really astonishing facts have already come to light. It has, for instance, become apparent that different institutions pay very different prices for almost the ‘same’ deals. Also, universities that did not want to give the information often responded with the argument that commercial interests had to be protected (full details for all cases are in Tim Gowers’ blog post).

The ‘Big deals’ that Elsevier and other scientific publishers have made with libraries form their insurance for long-term profits. Making these deals subject to confidentiality actively prevents the decrease in publishing prices that would otherwise occur through market mechanisms. And better still from the publishers’ viewpoint, so-called hybrid journals, which allow open-access publishing at a price, only add to the profits. Although publishers say that subscription prices will be lowered when the proportion of paid-for open access articles increases, there is as yet no sign of this. Article processing charges (APCs) for open access articles in hybrid journals are on average $900 higher than in full open access journals (as Dave Robberts reported on Google+).

A discussion thread on the above topics was started on the open access list of the OKFN. The following is an attempt to summarize this discussion and, at the same time, to ask everyone to participate in this project by adding data on subscription prices in their country to the growing dataset.

Charging for educational re-use…

One of the new items that came to light was that Elsevier and other publishers receive extra fees for allowing articles to be re-used for education. One would think that this situation would be covered by fair use policies, but this is not the case. Esther Hoorn of Groningen University (NL) provided the following link for an overview of worldwide rules on fair use and limitations for educational use: Study for Copyright Limitations and Exceptions for Educational Activities in North America, Europe, Caucasus, Central Asia and Israel (by Raquel Xalabarder, Professor of Law, Universitat Oberta de Catalunya (UOC), Barcelona, Spain). For instance, in the Netherlands a fair remuneration has to be paid for using publisher-copyrighted material in education. Not very surprisingly, this is done in the form of a nationwide big deal with the publishers that has to be renegotiated every few years. According to Jeroen Bosman of Utrecht University (NL), educational use has been granted by some publishers or is sometimes included in big deal contracts for subscriptions. The situation seems to be somewhat similar in other countries. According to Stuart Lawson, in the UK higher education institutions pay the Copyright Licensing Agency (CLA) for a license to copy and re-use work for educational purposes. In 2013 the CLA had an income of £7,872,449 (https://www.cla.co.uk/data/pdfs/accounts/cla_statutory_accounts_year_to_2013.pdf, p.1). Not all of that was from educational institutions, but a lot of it was. That is another few million pounds that is only spent because the original work wasn’t openly licensed.

According to Fabiana Kubke of the University of Auckland, New Zealand does not have fair use either – it has fair dealing. A substantial amount goes into licensing for education at the University of Auckland, and those licences do not come out of the libraries’ budget, which is why they don’t show in the libraries’ expenditures. This makes it all the more difficult to find out the costs of this type of licensing. One would need to find out, for each institution, which office is in charge of negotiating and paying Copyright Clearing house costs.

From the examples that were discussed it seems that ‘fair use’ for education almost always has to be paid for. Only in the US and Canada does fair use usually allow reproduction of up to 10% of a copyrighted work without payment. This situation of having to pay for re-use of publisher-copyrighted material will probably not change very soon. This is at least the conclusion that can be drawn from the recent decision by the EU to block further discussion of the case made at WIPO (World Intellectual Property Organisation) for harmonising international legislation on text and data mining and other copyright issues.

Fully implemented open access would be an order of magnitude cheaper…

Three items were heavily discussed on the open access mailing list: the cost of toll access publishing (what customers have to pay), the cost of open access publishing, and open access to data, which is necessary for text and data mining. Concerning the cost of publishing, it would be very useful to have data on the total expenditures for publishing per country. For the total cost worldwide, data are available. According to Bjoern Brembs: “Data from the consulting firm Outsell in Burlingame, California, suggest that the science-publishing industry generated $9.4 billion in revenue in 2011 and published around 1.8 million English-language articles – an average revenue per article of roughly $5,000.” For the cost of open access publishing, Outsell estimates that the average per-article charge for open-access publishers in 2011 was $660.
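The arithmetic behind the Outsell figures can be checked in a few lines. The sketch below uses only the numbers quoted above; the variable names are illustrative, and the ratio to the open-access charge is a simple comparison added here, not something Outsell reports.

```python
# Figures quoted above from Outsell (2011); variable names are illustrative.
industry_revenue_usd = 9.4e9   # total science-publishing revenue
articles_published = 1.8e6     # English-language articles
oa_charge_usd = 660            # average per-article charge, open-access publishers

# Average revenue per article, the "roughly $5,000" in the quote.
revenue_per_article = industry_revenue_usd / articles_published

# How many times larger that is than the average open-access charge.
ratio = revenue_per_article / oa_charge_usd

print(f"Revenue per article: ${revenue_per_article:,.0f}")
print(f"Ratio to average open-access charge: {ratio:.1f}x")
```

With these inputs the revenue per article comes out at about $5,222, a little under eight times the average open-access charge.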

Doing some quick calculations on Elsevier’s income from access to its digital content, Ross Mounce initially concluded that the cost per article was on average $2,800 over the subscription lifetime of an article (70 years). This assumed a mere $0.5 billion income per year for Elsevier from digital access to its content. The more realistic figure here is probably $1 billion, so the cost per article for a representative toll access business would be $5,600, in line with what Outsell estimated. The cost of publishing with one representative open access publisher (PLoS) is at the moment around $1,350 per article.

This means that if we could switch to full open access for all articles immediately, we would save about 76% on publishing costs!
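As a rough check on that percentage, here is a minimal sketch using only the per-article figures quoted in this post. The helper name `savings_fraction` is hypothetical, and pairing the two Outsell averages for a second estimate is an extra comparison made here, not a calculation from the mailing list discussion.

```python
def savings_fraction(toll_cost_usd, oa_cost_usd):
    """Fraction of per-article cost saved by switching to open access."""
    return 1 - oa_cost_usd / toll_cost_usd

# Ross Mounce's estimate scales linearly with the assumed yearly income:
# $2,800 per article at $0.5 billion, so twice that at $1 billion.
toll_cost_usd = 2800 * (1.0 / 0.5)

# Toll access estimate versus the PLoS charge of $1,350 per article.
plos_savings = savings_fraction(toll_cost_usd, 1350)

# Outsell's averages: roughly $5,000 revenue per article versus $660.
outsell_savings = savings_fraction(5000, 660)

print(f"Toll access cost per article: ${toll_cost_usd:,.0f}")
print(f"Savings with PLoS pricing: {plos_savings:.0%}")
print(f"Savings with Outsell averages: {outsell_savings:.0%}")
```

The first comparison reproduces the figure of about 76%; using Outsell's averages instead, the saving would be closer to 87%.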

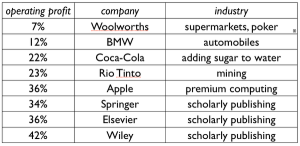

Profit of scholarly publishing vs. other industries pic.twitter.com/L3U6GWhM via @ceptional

Regarding the profit margins of toll access publishers, Stuart Lawson provided a link to a spreadsheet in which he compared revenues, profits, and profit margins of academic publishers for the last two years (Link). There is nothing wrong with making a profit as such. The profit margins of scientific publishers are an issue because of their scale, which would be impossible in a truly open market where open access publishing would have a competitive advantage. However, the toll access publishers’ business of secret deals and long-term contracts works very effectively against a transition to open access business models. The more data we can aggregate on publishing costs, the more chance there is that open access can profit from its competitive advantage. Data for several individual countries, notably Brazil, Germany, UK, Netherlands, USA, France and many more, are already coming in.

What about open data…

Open data is another issue. As Peter Murray-Rust wrote in the discussion thread on the open access mailing list: “the real danger [of] publishers moving into data where they will do far worse damage in controlling us http://blogs.ch.cam.ac.uk/pmr/2014/04/29/is-elsevier-going-to-take-control-of-us-and-our-data-the-vice-chancellor-of-cambridge-thinks-so-and-im-terrified/” and “Yes – keep fighting on prices. But prices are not the primary issue – it’s control.” Still, scientific publishers make huge profits, and money can buy a lot of influence. There is a growing awareness that data are the ‘gold’ of the 21st century. It is of vital importance for science and society that scientific data are not controlled by a few big publishers who want to make as much profit as possible. Open data would be the best way to prevent this from happening. The signs in the US are positive in this respect, with Obama’s directive for open government data. The outlook in Europe is less good in view of the previously mentioned decision by the EU to block further discussion on harmonising international legislation on text and data mining.

What we can do…

Together we can uncover the real cost of scientific publishing. Some of you may know where to find figures for your country, or you may be able to ask for information using Freedom of Information legislation. You can add these data directly to a spreadsheet on GoogleDocs or report them on the WIKI. The aggregated data will allow us to lobby more effectively for the promotion of open knowledge. We will keep you apprised of developments through the open access mailing list and the blogs on this website. You can subscribe to the open access mailing list and/or other lists of OKFN at LISTINFO.

Open Access Working Group